Some peeps over at the University of York are organising a conference on Advances in Visual Methods for Linguistics (AVML), to take place in September next year. This might be of interest to evolutionary linguists who use things like phylogenetic trees, networks, visual simulations or other fancy dancy visual methods. The following is taken from their website:

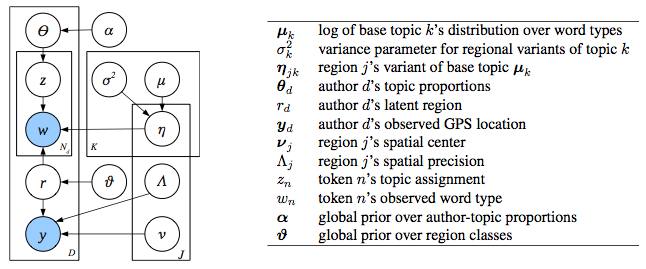

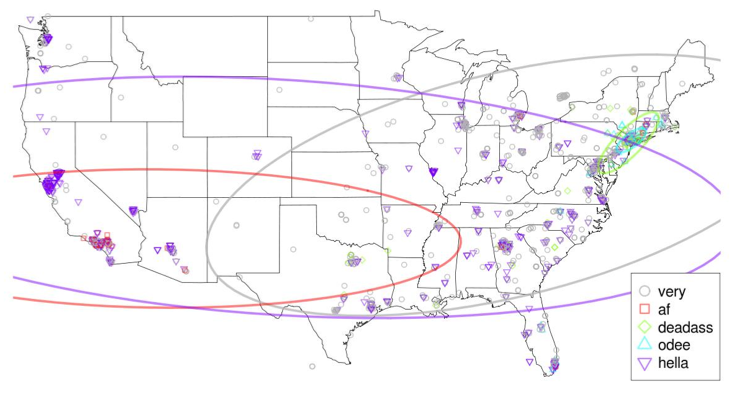

Linguistics, like other scientific disciplines, is centrally reliant upon visual images for the elicitation, analysis and presentation of data. It is difficult to imagine how linguistics could have developed, and how it could be done today, without visual representations such as syntactic trees, psychoperceptual models, vocal tract diagrams, dialect maps, or spectrograms. Complex multidimensional data can be condensed into forms that can be easily and immediately grasped in a way that would be considerably more taxing, even impossible, through textual means. Transforming our numerical results into graphical formats, according to Cleveland (1993: 1), ‘provides a front line of attack, revealing intricate structure in data that cannot be absorbed in any other way. We discover unimagined effects, and we challenge imagined ones.’ Or, as Keith Johnson succinctly puts it, ‘Nothing beats a picture’ (2008: 6).

So embedded are the ways we visualize linguistic data and linguistic phenomena in our research and teaching that it is easy to overlook the design and function of these graphical techniques. Yet the availability of powerful freeware and shareware packages which can produce easily customized publication-quality images means that we can create visual enhancements to our research output more quickly and more cheaply than ever before. Crucially, it is very much easier now than at any time in the past to experiment with imaginative and innovative ideas in visual methods. The potential for the inclusion of enriched content (animations, films, colour illustrations, interactive figures, etc.) in the ever-increasing quantities of research literature, resource materials and new textbooks being published, especially online, is enormous. There is clearly a growing appetite among the academic community for the sharing of inventive graphical methods, to judge from the contributions made by researchers to the websites and blogs that have proliferated in recent years (e.g. Infosthetics, Information is Beautiful, Cool Infographics, BBC Dimensions, or Visual Complexity).

In spite of the ubiquity and indispensability of graphical methods in linguistics, it does not appear that a conference dedicated to sharing techniques and best practices in this domain has taken place before. This is less surprising when one considers that relatively little has been published specifically on the subject (exceptions are Stewart (1976) and publications by the LInfoVis group). We think it is important that researchers from a broad spectrum of linguistic disciplines spend time discussing how their work can be done more efficiently, and how it can achieve greater impact, using the profusion of flexible and intuitive graphical tools at their disposal. It is also instructive to view advances in visual methods for linguistics from a historical perspective, to gain a greater sense of how linguistics has benefited from borrowed methodologies, and how in some cases the discipline has been at the forefront of developments in visual techniques.