It has long been obvious to me that the so-called cognitive revolution is what happened when computation – both the idea and the digital technology – hit the human sciences. But I’ve seen little reflection of that in the literary cognitivism of the last decade and a half. And that, I fear, is a mistake.

Thus, when I set out to write a long programmatic essay, Literary Morphology: Nine Propositions in a Naturalist Theory of Form, I argued that we should think of the literary text as a computational form. I submitted the essay and found that both reviewers were puzzled about what I meant by computation. Although publication was not conditional on resolving their puzzlement, I did make some effort to do so, though I’d be surprised if they were completely satisfied by the result.

That was a few years ago.

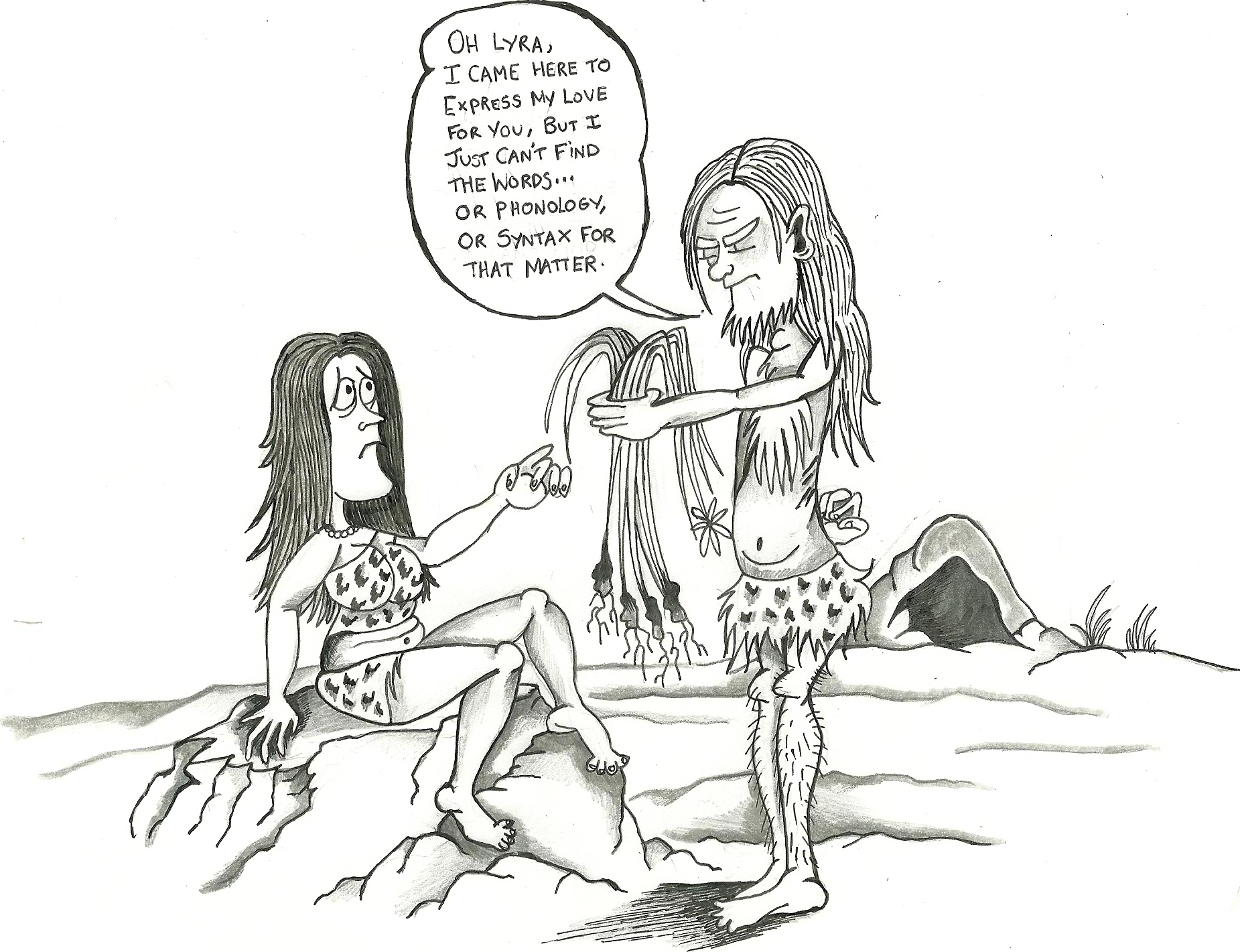

Ever since then I’ve pondered the issue: how do I talk about computation to a literary audience? You see, some of my graduate training was in computational linguistics, so I find it natural to think about language processing as entailing computation. As literature is constituted by language, it too must involve computation. But for readers without some background in computational linguistics or artificial intelligence, I’m not sure the notion is much more than a buzzword that’s been trendy for the last few decades – and that’s an awfully long time for being trendy.

I’ve already written one post specifically on this issue: Cognitivism for the Critic, in Four & a Parable, where I write abstracts of four texts which, taken together, give a good feel for the computational side of cognitive science. Here’s another crack at it, from a different angle: symbol processing.

Operations on Symbols

I take it that ordinary arithmetic is most people’s ‘default’ case for what computation is. Not only have we all learned it, it’s fundamental to our knowledge, like reading and writing. Whatever we know, think, or intuit about computation is built on our practical knowledge of arithmetic.

As far as I can tell, we think of arithmetic as being about numbers. Numbers are different from words. And they’re different from literary texts. And not merely different. Some of us – many of whom study literature professionally – have learned that numbers and literature are deeply and utterly different to the point of being fundamentally in opposition to one another. From that point of view the notion that literary texts be understood computationally is little short of blasphemy.

Not so. Not quite.

The question of just what numbers are – metaphysically, ontologically – is well beyond the scope of this post. But what they are in arithmetic, that’s simple; they’re symbols. Words too are symbols; and literary texts are constituted of words. In this sense, perhaps superficial, but nonetheless real, reading literary texts and making arithmetic calculations are the same thing: operations on symbols.
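To make that claim concrete, here is a minimal sketch in Python of schoolbook addition carried out on numerals treated purely as strings of digit characters. The names `DIGITS`, `ADD`, and `add_numerals` are my own illustrations, not anything from the essay or from a library. The lookup table stands in for the addition facts we memorize as children; I build it with Python's integers only to avoid typing out a hundred entries by hand. Once the table exists, the procedure itself never treats a digit as anything but a mark to be looked up and copied.

```python
# The ten digit symbols, in their conventional order.
DIGITS = "0123456789"

# The memorized "addition facts": for each pair of digit symbols,
# the carry symbol and the sum symbol. Built with integers here purely
# for brevity; conceptually it is a table of marks, like a times table.
ADD = {
    (a, b): (DIGITS[(i + j) // 10], DIGITS[(i + j) % 10])
    for i, a in enumerate(DIGITS)
    for j, b in enumerate(DIGITS)
}


def add_numerals(x: str, y: str) -> str:
    """Add two decimal numerals column by column, right to left,
    using only table lookups on digit symbols."""
    # Pad the shorter numeral with the symbol "0" so the columns line up.
    width = max(len(x), len(y))
    x, y = x.rjust(width, "0"), y.rjust(width, "0")

    carry = "0"
    columns = []
    for a, b in zip(reversed(x), reversed(y)):
        carry_1, partial = ADD[(a, b)]         # add the two column digits
        carry_2, digit = ADD[(partial, carry)]  # then add the incoming carry
        # At most one of the two lookups can produce a carry of "1".
        carry = "1" if "1" in (carry_1, carry_2) else "0"
        columns.append(digit)

    if carry != "0":
        columns.append(carry)
    return "".join(reversed(columns))


print(add_numerals("47", "85"))   # prints 132
print(add_numerals("999", "1"))   # prints 1000
```

Nothing in `add_numerals` depends on what the marks mean. Swap in ten other characters for `DIGITS` and the same procedure still works, which is the modest sense in which arithmetic is an operation on symbols.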