A recent NPR radio show covered the research of the biosemiotician Con Slobodchikoff of the University of Arizona on prairie dog calls. The piece is aimed squarely at a general audience, but it still might be worth a listen.

We’ve all (I hope) heard of vervet monkeys, which have different alarm calls for different predators, such as the leopard (Panthera pardus), the martial eagle (Polemaetus bellicosus), and the python (Python sebae) (Seyfarth et al. 1980). For each of these predators, the first monkey to spot it utters an inherent, unlearned call, and the rest of the group responds in a suitable manner: climbing a tree, seeking shelter, and so on. It appears that prairie dogs have a similar system, and that theirs is a bit more complicated.

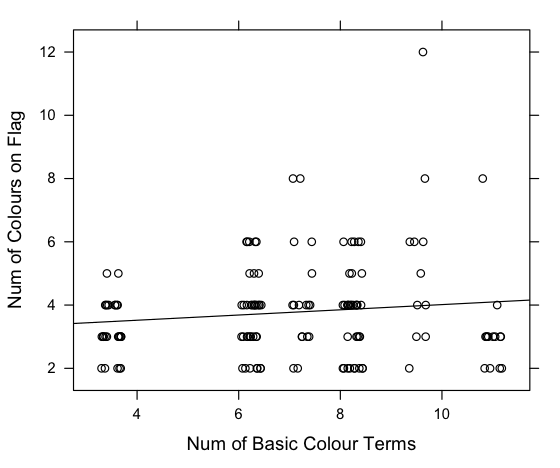

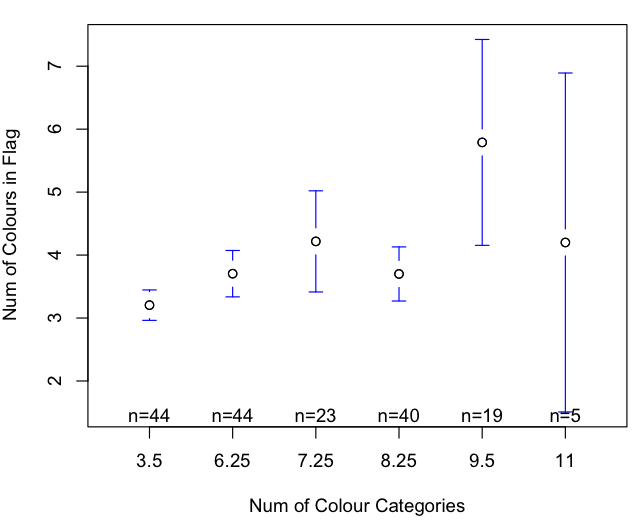

Slobodchikoff conducted a study in which three young women (probably underpaid, underprivileged, and underappreciated (under)graduate students) walked through a prairie dog colony wearing green, yellow, and blue shirts. The call of the first prairie dog to notice them was recorded, after which the prairie dogs all fled into their burrows. Each walker then crossed the entire colony, took a ten-minute break, changed shirts, and did it again.

What is interesting is that the prairie dogs give significantly different calls for blue and yellow (notable, since the calls sound virtually identical to human ears), but not for yellow and green. This is due to the dichromatic nature of prairie dog eyesight (for a detailed study of retinal photoreceptors in subterranean rodents, see Schleich et al. 2010). The blue/yellow distinction matters because there is no obvious reason why people in blue should be any more dangerous to prairie dogs than people in yellow. “This in turn suggests that the prairie dogs are labeling the predators according to some cognitive category, rather than merely providing instructions on how to escape from a particular predator or responding to the urgency of a predator attack.” (Slobodchikoff et al. 2009, p. 438)

In another study, two towers were built and a line was strung between them. When cut-out shapes were sent down the line, the prairie dogs distinguished a triangle from a circle, but not a circle from a square. So the prairie dogs are not perfect at encoding information. Still, the conclusion stands: the calls encode more information than is strictly needed to trigger a suitable escape response (unless one wants to argue that prairie dogs were under evolutionary pressure to distinguish blue predators from yellow ones).

NPR labels this ‘prairiedogese’, which makes me shiver and reminds me of Punxsutawney, Pennsylvania, where Bill Murray was stuck in a vicious cycle in the movie Groundhog Day, forced every day to watch the mayor recite the translated proclamation of the groundhog, which of course spoke ‘groundhogese’. Luckily, there probably won’t be any courses in this ‘language’.

References:

Schleich, C., Vielma, A., Glösmann, M., Palacios, A., & Peichl, L. (2010). Retinal photoreceptors of two subterranean tuco-tuco species (Rodentia, Ctenomys): Morphology, topography, and spectral sensitivity. The Journal of Comparative Neurology, 518(19), 4001–4015. DOI: 10.1002/cne.22440

Seyfarth, R., Cheney, D., & Marler, P. (1980). Monkey responses to three different alarm calls: Evidence of predator classification and semantic communication. Science, 210(4471), 801–803. DOI: 10.1126/science.7433999

Slobodchikoff, C. N., Paseka, A., & Verdolin, J. L. (2009). Prairie dog alarm calls encode labels about predator colors. Animal Cognition, 12(3), 435–439. PMID: 19116730