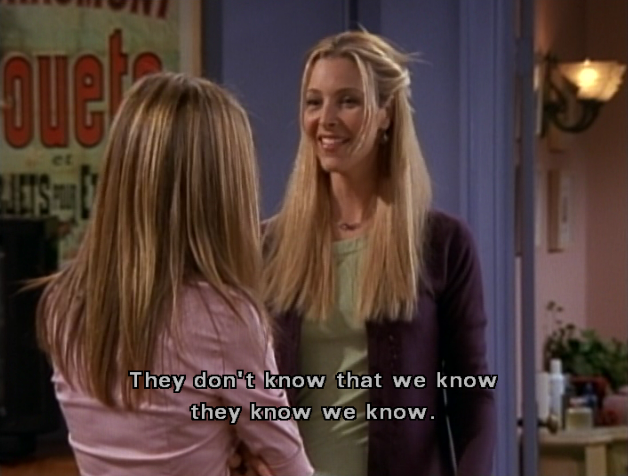

A large part of human humour depends on understanding that the intention of the person telling the joke might be different to what they are actually saying. The person needs to tell the joke so that you understand that they’re telling a joke, so they need to know that you know that they do not intend to convey the meaning they are about to utter… Things get even more complicated when we are telling each other jokes that involve other people having thoughts and beliefs about other people. We call this kind of knowledge nested intentions, or recursive mental attributions. We can already see, based on my complicated description, that this is a serious matter and requires scientific investigation. Fortunately, a recent paper by Dunbar, Launay and Curry (2015) investigated whether the structure of jokes is restricted by the number of nested intentions required to understand the joke. They make a couple of interesting predictions about the mental processing involved in understanding humour, and how it should be reflected in the structure and funniness of jokes. In today’s blogpost I want to discuss the paper’s methodology and some of its claims.

Previous research by Dunbar and colleagues (e.g. Kinderman et al., 1998; Stiller and Dunbar, 2007) has found that people are actually quite good at these nested mental representations, but only up to the fifth level (e.g. “I know (1) that you believe (2) that he thinks (3) that she wants (4) you to believe (5) that x”). If it gets any more complex, they start to perform at chance level, effectively guessing. Building on this finding, Dunbar et al. (2015) investigated the complexity of these mental attributions in jokes. A joke about other people is potentially more complex than one about oneself. If these people have thoughts and beliefs about each other, then things get even more complex. To keep track, we can number these mental states, too. For example, in joke #4 the comedian has to intend (1) that the audience understands (2) that the comedian intends (3) to tell a joke about a boy who intends (4) the barber not to know (5) that the boy knows (6) how the game works.
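To make the counting concrete, here is a minimal sketch of how one could tally the numbered mental states in the joke #4 example. This is my own illustration, not the coding scheme used in the paper: the list representation, the name `count_levels`, and the decision to treat the comedian and the audience as "framing" agents outside the joke are all assumptions.

```python
# Each entry is one mental state in the chain: (who holds it, what kind of state).
# The chain follows the numbered example for joke #4 in the text above.
JOKE_4 = [
    ("comedian", "intends"),
    ("audience", "understands"),
    ("comedian", "intends"),
    ("boy", "intends"),
    ("barber", "does not know"),
    ("boy", "knows"),
]

# Assumption for illustration: these agents sit outside the joke itself.
FRAMING_AGENTS = {"comedian", "audience"}


def count_levels(chain):
    """Count all mental states, and split them into framing vs. within-joke."""
    total = len(chain)
    framing = sum(1 for agent, _ in chain if agent in FRAMING_AGENTS)
    return {"total": total, "framing": framing, "within_joke": total - framing}


if __name__ == "__main__":
    print(count_levels(JOKE_4))
```

How many levels a joke "has" clearly depends on which agents are counted as part of the joke, which is exactly the point that matters for the argument further down.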

The main premise of the paper is that jokes should have a limit on nested mental representations of three levels – after which jokes should no longer be perceived to be funny as they’re simply too complex. So why only three levels, if we are fine understanding nested metarepresentations of up to five levels? Dunbar et al. argue that this is because the audience needs to represent (1) the comedian intending to tell a joke (2), and that this is fundamentally different to reading nested meta-representations on paper.

Dunbar et al. investigated this using a sample of 65 out of 101 jokes that they took from a website that allowed visitors to up-vote jokes. As the paper repeatedly stresses, these jokes are indeed funny, and are used by some of the world’s best comedians. Although that only applies to some of the jokes, for the purposes of the study they should do a good-enough job (but more about that later), particularly since the jokes were filtered and re-rated to ensure that they are culturally appropriate for a British audience.

The authors found that the jokes’ mental complexity peaked after five levels – if, as mentioned earlier, we account for the levels required to initiate communication, e.g. the audience representing the comedian’s intentions. After this level the jokes were not rated as funny anymore. So, effectively the jokes peaked after three levels. This would be an interesting result indicating that human mentalising is constrained by the levels of mental representations, and that this signature limit depends on the presentation of the information. However, I do not quite share Dunbar et al.’s intuitions about mental representations in jokes, as there are methodological concerns with this and previous research.

Problems of measuring mental representations

I’m not convinced that this is entirely driven by higher order mentalising, rather than by a more general principle of higher cognitive demands. The authors did control for complexity as measured in number of words, but this does not capture other aspects of linguistic complexity. Sometimes using more words, breaking up sentences, etc., might make a text easier to comprehend. One might also argue that additional words can be used to build up expectations during a joke and extend the punchline, thereby making it funnier. Whilst the number of words is an easy measure to use as a control for complexity, it isn’t the best one. This already seems to be a problem in previous studies that investigated higher order mental representations.

In previous research, Dunbar and colleagues (e.g. Stiller and Dunbar, 2007; Kinderman et al., 1998) asked participants to rate statements as true or false based on a story that they had read beforehand. These statements were either about mental representations or about factual knowledge. Participants did well on the control questions up to a complexity of seven levels, but performance on the mental questions was at chance level after five levels. However, these differences mainly seem to have been driven by different levels of linguistic complexity. In our replication (O’Grady et al., 2015), we did not find the characteristic drop-off after the fifth level that they found in their study. Our study, however, avoided a lot of the ambiguous sentences and statements that riddled the original research and that were more common in the complex mental questions than in the control questions. Some of the statements used in the original studies were downright ambiguous. For example, one of the statements testing nested metarepresentation was that “Pete wanted Sam to know that he believed that Henry had intended not to mislead him.” – Does the anaphor “him” refer to Pete or Sam? Depending on how we interpret the sentence, it is either true or false.

The problem isn’t that participants would not be able to make the correct inference, but that they were additionally instructed to mark a statement as false if they were unsure. If the original statement was ambiguous, participants should be more likely to feel unsure and mark the statement as false, even though it is true. The wording and the coding of the task cannot capture the complexity of the stimuli. It seems that the test isn’t so much a test of people’s understanding of higher order mental representations, but of grammatical complexity and ambiguity. As my collaborator Cathleen O’Grady will be able to testify, it’s pretty damn hard to write good stories and questions, and to make sure that they are not ambiguous. To further avoid these issues, we presented two conflicting statements in a forced choice and asked for participants’ confidence in their rating, rather than having them mark questions that they were not sure about as false. Admittedly, our design was slightly different to the original design in other ways, too. Ideally, this debate could be settled by directly comparing both sets of stories and questions in the same study and determining whether the key differences are driven by the stories or not. But back to the jokes.
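Before moving on, a small sketch of why the "mark it false if you are unsure" instruction matters. It is a toy calculation under my own assumptions, not a model fitted to either study: `p_unsure` is a hypothetical probability that a participant feels unsure about a statement, and `p_correct_when_sure` is a hypothetical probability of answering correctly when they do not. Unsure participants always answer "false".

```python
def expected_accuracy(p_unsure, p_correct_when_sure, statement_is_true):
    """Expected proportion correct under a 'mark false if unsure' rule.

    All parameters are hypothetical and only serve to illustrate the bias.
    """
    # Participants who feel sure answer correctly with the given probability.
    sure_part = (1 - p_unsure) * p_correct_when_sure
    if statement_is_true:
        # Unsure participants answer "false", which is wrong for a true statement.
        return sure_part
    # For a false statement, the default answer "false" happens to be right.
    return sure_part + p_unsure


if __name__ == "__main__":
    # Same understanding (perfect when sure), increasing ambiguity.
    for p_unsure in (0.0, 0.25, 0.5):
        on_true = expected_accuracy(p_unsure, 1.0, statement_is_true=True)
        on_false = expected_accuracy(p_unsure, 1.0, statement_is_true=False)
        print(p_unsure, on_true, on_false)
```

In this toy setup, accuracy on true statements falls in step with uncertainty even though understanding is held constant, while accuracy on false statements does not. An ambiguous but true statement is therefore penalised by the coding rather than by any failure of mentalising.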

The presence of comedian and audience adds extra levels of meta-representation.

My main concern with this paper is about its theoretical claim that we should expect jokes to peak at three levels. In the previous literature participants do well in metarepresentations of up to five levels. But now Dunbar and colleagues argue that the interaction of the comedian and the audience should be included in calculating the levels of intentionality. I can’t quite follow how this would be different to reading a text in a study, like Stiller and Dunbar’s, where the author wants the reader to understand x number of levels of intentionality. The relation between the comedian and the audience is not necessarily different to that between the author and the reader. Both intend to communicate a story of x levels of complexity. The reader of a written text should need to represent the author’s intention, too. But maybe, for whatever reason, they do not need to do so.

However, taking this position, comprehension of jokes should be different when reading the jokes on paper, compared to being in the audience at a comedy event. If that were true, there should be a higher limit for written jokes, compared to those told to an audience. Dunbar and colleagues make compelling references to previous research indicating that written and acted text is easier to comprehend than narrated text, e.g. drama (which would be similar to our study) and other works of literature. They write that the comedian stands in the audience, retelling the joke, and thereby requiring those extra levels of intentionality. However, in their study, the jokes were rated in written form. Furthermore, many jokes work just as well, if not better, in written form (e.g. this one that is attributed to the well-known comedian Abraham Lincoln: “If this is coffee, please bring me some tea. If this is tea, please bring me some coffee.”) Because of this, we should observe a signature limit of five levels instead of three, just like they found in the previous literature.

It would be possible to experimentally manipulate the complexity of jokes by telling them either in the first person or the third person, and thereby manipulating the levels of mental representation. If the phrasing of the joke matters, we should observe different signature limits depending on whether the jokes are written in the first or the third person. Likewise, jokes could be presented both in written form and narrated live, similar to our study. If it is the presentation that matters and the authors are correct in arguing that the presence of a comedian affects the comprehension of jokes beyond the fifth level of intentionality, we would expect those jokes told in the third person to decline in perceived funniness once they surpass five levels of intentionality, compared to their borderline first person equivalents. But until this claim has been tested experimentally, I am not convinced by the findings.

References

Dunbar, R., Launay, J., and Curry, O. (2015). The complexity of jokes is limited by cognitive constraints on mentalizing. Human Nature, 1–11. doi: 10.1007/s12110-015-9251-6

Kinderman, P., Dunbar, R., and Bentall, R. P. (1998). Theory-of-mind deficits and causal attributions. British Journal of Psychology, 89(2), 191–204. doi: 10.1111/j.2044-8295.1998.tb02680.x

O’Grady, C., Kliesch, C., Smith, K., and Scott-Phillips, T. C. (2015). The ease and extent of recursive mindreading, across implicit and explicit tasks. Evolution and Human Behavior, 36(4), 313–322. doi: 10.1016/j.evolhumbehav.2015.01.004

Stiller, J. and Dunbar, R. I. M. (2007). Perspective-taking and memory capacity predict social network size. Social Networks, 29(1), 93–104. doi: 10.1016/j.socnet.2006.04.001