Prof. Alfred Hubler is an actual mad professor who is a danger to life as we know it. In a talk this evening he went from ball bearings in castor oil to hyper-advanced machine intelligence and from some bits of string to the boundary conditions of the universe. Hubler suggests that he is building a hyper-intelligent computer. However, will hyper-intelligent machines actually give us a better scientific understanding of the universe, or will they just spend their time playing Tetris?

Let him take you on a journey…

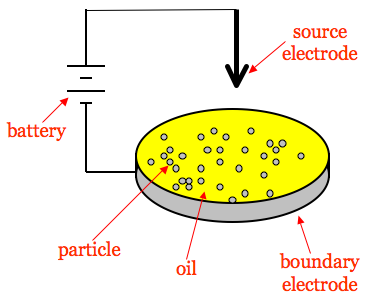

Hubler works on ‘arbitrons’, which are learning mechanisms similar to neural nets or perceptrons. They consist of a petri dish with a metal edge and a charged electrode hovering above it. Around a hundred tiny metal balls are placed in the petri dish and covered with a film of castor oil.

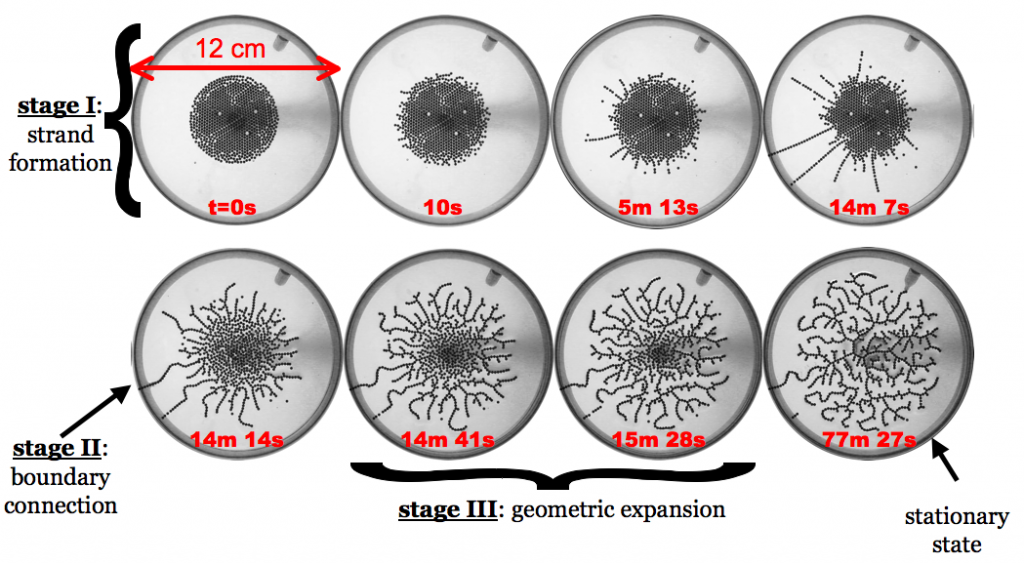

When the charge is large enough, the metal balls begin to move towards the edges. They do so in linked lines – several lines will compete to be the first to get to the edge and complete the circuit. When they do, they self-organise into a particular structure. The exact structure is impossible to predict, but the shape always converges to a tree network with particular properties:

- There are no closed loops

- 22% of the nodes will be leaf nodes

- 22% of the nodes will have 3 neighbours

- The rest of the nodes will have 2 neighbours

- It is self-repairing
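The degree figures in this list are not independent of each other. A tree with N nodes has exactly N − 1 links, so the numbers of neighbours summed over all nodes come to 2(N − 1). If every node has 1, 2 or 3 neighbours, this forces the number of leaves to equal the number of three-neighbour nodes plus two, so in a large tree the two percentages must be almost identical, which is what the 22%/22% figures show. The sketch below checks this on a randomly grown tree. The growth rule (attach each new node to a random node with fewer than three neighbours) and the function name `grow_random_tree` are assumptions made for illustration, not the arbitron dynamics, so the code does not reproduce the 22% value itself, only the constraint linking the two figures.

```python
import math
import random
from collections import Counter
from typing import List


def grow_random_tree(n_nodes: int, max_degree: int = 3, seed: int = 0) -> List[int]:
    """Grow a random tree and return the number of neighbours of each node.

    Each new node is linked to one randomly chosen existing node that still
    has fewer than `max_degree` neighbours. This is a hypothetical growth
    rule for illustration, not the physics of the ball bearings.
    """
    rng = random.Random(seed)
    degree = [0]  # start from a single isolated node
    for _ in range(1, n_nodes):
        open_nodes = [v for v, d in enumerate(degree) if d < max_degree]
        parent = rng.choice(open_nodes)
        degree[parent] += 1
        degree.append(1)  # the new node is a leaf, linked only to its parent
    return degree


degrees = grow_random_tree(1000)
counts = Counter(degrees)
n = len(degrees)

# A tree has n - 1 links, and each link adds one neighbour to two nodes.
assert sum(degrees) == 2 * (n - 1)
# With only 1, 2 or 3 neighbours allowed, leaves = three-neighbour nodes + 2.
assert counts[1] == counts[3] + 2

print(f"leaves: {counts[1] / n:.1%}, 2 neighbours: {counts[2] / n:.1%}, "
      f"3 neighbours: {counts[3] / n:.1%}")

# The quoted percentages imply an average of 2 neighbours per node,
# as expected for a large tree (2 * (n - 1) / n approaches 2).
quoted_mean = 0.22 * 1 + 0.56 * 2 + 0.22 * 3
print(math.isclose(quoted_mean, 2.0))
```

The "no closed loops" property is what makes the arithmetic work: adding any extra link would create a loop and push the average above 2.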

This is easiest to demonstrate with a picture:

Although the exact pattern is unpredictable, some statistical properties that matter for how the system functions are reproducible, such as the percentage of nodes with each number of neighbours and the overall topology. These are the things that scientists should be studying. In terms of Evolutionary Linguistics, this translates into phenomena like the effects of transmission bottlenecks and the relationship between social structure and language structure.

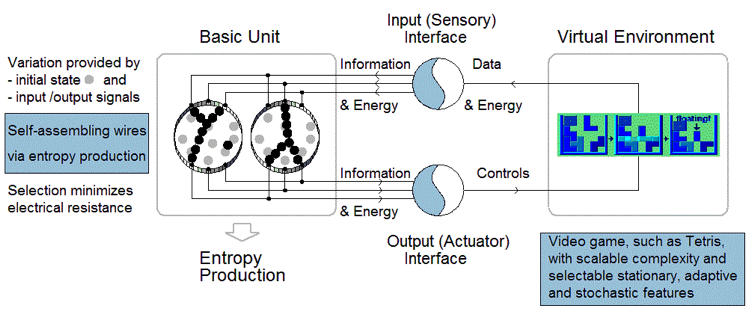

This is cool enough. However, these balls-in-a-petri-dish can also learn. By adding several electrodes that control a behaviour, and rewarding success at some aspect of that behaviour with a greater charge to one of the nodes, you can get a group of petri dishes to PLAY TETRIS:

This is Hebbian learning, similar to the way a neural net learns. And indeed, a set of arbitrons can successfully learn to play Tetris (controlling the movement of blocks) or form logic gates. They work by harvesting the energy gained from successful behaviour, and this forms the basis of memory. You can then make many arbitrons and set them floating in oil to create a network of arbitrons. They may even evolve their own sensors using electromagnetism and sensitivity to gravity.
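Hebbian learning is often summarised as "units that fire together wire together": a connection is strengthened in proportion to the joint activity of the two things it links. The sketch below is the generic textbook Hebbian rule with an added reward factor, which stands in, loosely and as an assumption, for the extra charge delivered after a successful move. The function name, the two-by-two setup and the learning rate are hypothetical; this is not a model of the conducting-particle physics described by Sperl et al. (1999).

```python
from typing import List

Matrix = List[List[float]]


def hebbian_step(weights: Matrix, pre: List[float], post: List[float],
                 rate: float = 0.1, reward: float = 1.0) -> Matrix:
    """One reward-modulated Hebbian update: w[i][j] += rate * reward * pre[i] * post[j].

    Connections between units that are active together are strengthened,
    in proportion to the reward signal (a hypothetical stand-in for the
    extra charge delivered on success).
    """
    return [
        [w + rate * reward * x * y for w, y in zip(row, post)]
        for row, x in zip(weights, pre)
    ]


# Two hypothetical "input" units (e.g. electrodes) and two "output" units
# (e.g. Tetris moves), with all connections starting at zero.
weights = [[0.0, 0.0], [0.0, 0.0]]

# Input 0 firing together with output 1 is rewarded five times in a row.
for _ in range(5):
    weights = hebbian_step(weights, pre=[1.0, 0.0], post=[0.0, 1.0])

print(weights)
```

Only the connection between the co-active, rewarded pair grows; with `reward=0.0` nothing changes at all, which is the sense in which success feeds memory.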

Hubler argues that these kinds of networks are practically more powerful than neural networks, especially since they can be built from nano-particles. In fact, he envisions these networks matching the processing capacity of the human brain (he is already working on a model that approximates 10% of the human brain), and eventually surpassing it: Manufactured components are more efficient than biological systems (e.g. superconductivity) and are free from constraints such as the need to reproduce. Hubler hopes that, eventually, these machines will help us gain a deeper understanding of the universe.

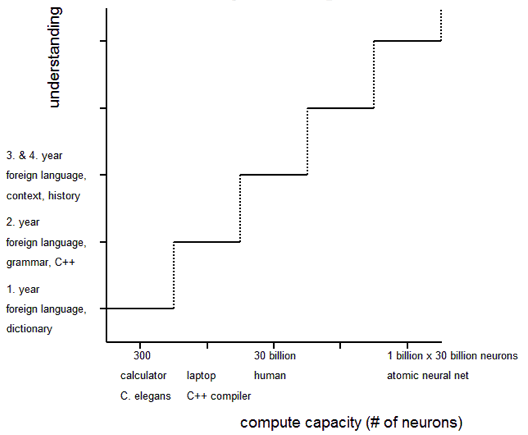

Hubler spent some time on his definition of understanding – he sees it as the ability to translate between things (e.g. the physical sun and the concept of a sun) or between abstract concepts, or between concepts at different levels. So, translating from the movements of the heavenly bodies to a set of mathematical principles counts as understanding. The level of understanding you can reach, therefore, depends on your computational capacity. Here’s an imaginary graph:

Interestingly, he used a linguistic metaphor: With increasing computational resources you can go from dictionary translation to grammatical translation to full-blown literary translation. Humans have more computational power than a calculator, but it’s just a graded scale. Furthermore, humans would not be able to translate their understanding to beings with lower computational capacity, such as ants: They just wouldn’t be able to comprehend the problems. Hubler imagines that machines will eventually be able to perceive patterns at higher orders of understanding than us and perhaps solve problems that we can’t.

Here, I asked what the purpose of these machines was, given that even neural nets are difficult to analyse and that, if his metric is correct, the machines will not be able to communicate their discoveries to us. He replied, “Building these is inevitable, someone else would do it if we didn’t”. Like I said, actual mad scientist.

So, ultra machine intelligence – the singularity – is achievable. However, I have two points of contention, or rather tempering statements:

First, although it seems impressive that humans can translate between two languages, the brain adapted to do this and language adapted to being processed by the brain. In one sense, it’s no wonder that our brains can do language – the brain invented language. If you could scale the complexity of understanding by how well adapted the computational device was, perhaps a bee translating a bee dance into food co-ordinates would be just as complex as a human translating a poem. However, it’s obvious that some individuals, with great effort, can gain new insights about the relevant, reproducible statistical properties of phenomena, as Newton did. This may scale up if the network components can be more numerous and work faster.

This leads to my second point – brains are good at cultural learning – language, behaviour, manipulation, gossip etc. Children learn language easily and reliably. They also gain an understanding of social cues intuitively. This is no surprise – humans have adapted for this, and cultural phenomena have adapted to humans. This is what Chater and Christiansen (2010) argue: Cultural induction is easy because it has adapted to our intuitive biases through a feedback loop. On the other hand, natural induction – reasoning about universal physical properties of the universe like gravity – is difficult because the solutions do not necessarily conform to our intuitions (I’ve actually argued against this here).

My question is: if beings with more computational resources become more culturally sophisticated, and more adapted to culture, is there any reason to think that hyper-intelligent machines will spend more resources on solving natural problems?

In other words, giving machines greater computational power may make them more complex translators, but they may start demanding more holidays.

This might be true for two reasons. First, as Chater and Christiansen suggest, problems with a feedback loop – such as cultural problems – are easier to solve. Since these machines will presumably be able to affect their environment, they will no doubt construct their own culture. Second, since they themselves have increasing cultural complexity, there is an increasing number of cultural phenomena to study in the world. Given that the machines have limited resources, to what extent will they invest them in natural induction?

I’ve been talking about these machines as if a whole society of them will be created, but even just one could form a culture. It’s an open question how to define an individual in a hyper-intelligent neural net. The whole net may evolve specialised modules, like species adapting to niches, or multiple agents, like a society. Furthermore, different modules may compete with each other for the energy they harvest, causing an arms race in which resources are invested in internal competition. This is what we often see in human societies – companies and species co-evolve to outcompete each other, and societies invest more in war than in research.

Of course, despite this, humans have actually made some progress in understanding science. Is there a way to quantitatively compare how complexity scales in natural and cultural systems? The work of Geoffrey West may offer one: West studies scaling laws in natural and cultural phenomena. A striking number of biological variables follow a 3/4 power scaling law known as Kleiber’s law. For instance, metabolic rate scales with body mass to the 3/4 power, and the number of capillaries scales with the number of cells they supply in the same way. So an animal’s heart rate slows as it gets bigger, and its lifespan lengthens: mice have shorter lifespans than blue whales. West and colleagues traced this to the geometry of branching distribution networks, such as blood vessels, that minimise the energy dissipated in transport. An exponent below one means that, as systems get bigger, each unit of mass receives proportionately less energy, so growth eventually levels off. That is, growth in natural systems cannot continue indefinitely. However, Bettencourt, West and colleagues found that many socioeconomic measures in cities scale with an exponent greater than one, so that per-capita measures increase with city size. For instance, total wages grow faster than city population: a city with twice the population has more than twice the total wages. West argues, therefore, that cultural growth is open-ended – even faster than exponential over time. Of course, such growth heads towards a finite-time singularity, and avoiding it requires paradigm shifts.
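The contrast between the two regimes comes down to the exponent β in a power law Y = Y0 × N^β, where N is the size of the system (body mass, city population). Per unit of size, Y/N = Y0 × N^(β − 1), which falls with size when β < 1 and rises when β > 1. The sketch below makes this concrete. β = 3/4 is Kleiber’s exponent; the urban exponent of 1.15 is an illustrative value assumed here for a generic superlinear city measure, not a figure taken from this post, and the function names are hypothetical.

```python
KLEIBER_BETA = 0.75  # metabolic rate vs. body mass (Kleiber's law)
URBAN_BETA = 1.15    # assumed, illustrative superlinear exponent for a city measure


def power_law(y0: float, size: float, beta: float) -> float:
    """Scaling law Y = y0 * size**beta."""
    return y0 * size ** beta


def per_unit_factor_on_doubling(beta: float) -> float:
    """Factor by which Y / size changes when size doubles: 2**(beta - 1)."""
    return 2.0 ** (beta - 1.0)


# Sublinear (beta < 1): doubling body mass lowers metabolic rate per kilogram.
print(f"per-kg metabolic rate x{per_unit_factor_on_doubling(KLEIBER_BETA):.2f}")  # x0.84
# Superlinear (beta > 1): doubling population raises output per person.
print(f"per-capita output x{per_unit_factor_on_doubling(URBAN_BETA):.2f}")       # x1.11

# A power law multiplies Y by the same factor (2**beta) whenever size doubles,
# whatever the starting size, so it is not exponential in size.
for n in (1e4, 1e5, 1e6):
    ratio = power_law(1.0, 2 * n, URBAN_BETA) / power_law(1.0, n, URBAN_BETA)
    print(f"{n:>9,.0f} -> {2 * n:>9,.0f} people: Y grows x{ratio:.3f}")
```

The constant doubling factor is the signature of a power law: per-capita output keeps rising with size, but by a fixed proportion per doubling rather than exponentially.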

Good news for the environment, perhaps, but maybe bad news for understanding gained from hyper-intelligent machines. If we compare how observable patterns scale with computational complexity, this suggests that cultural patterns scale superlinearly, while accessible patterns in natural phenomena may shrink proportionately. That is, the machines will spend more time doing cultural induction than natural induction. This may lead to self-domestication, as it did in humans, and shield the machines from natural selection pressures. If they are shielded in this way, they cannot adapt to natural problems and so will not find solutions to them.

Hubler argued against this point by suggesting that the machines, because they use technology that is free from biological constraints, will be able to create paradigm shifts and produce advanced understanding. I actually agree – the evidence shows that humans, despite increasingly sophisticated societies, are gaining a deeper understanding of the universe. I guess my point is, don’t expect the machines to be hyper-lab-geek-researchers. Not unlike Iain M. Banks’ view of the Culture, they will have their own culture and concerns that will be incomprehensible to us.

Not that this will stop Alfred Hubler.

Sperl, M., Chang, A., Weber, N., & Hübler, A. (1999). Hebbian learning in the agglomeration of conducting particles. Physical Review E, 59(3), 3165-3168. DOI: 10.1103/PhysRevE.59.3165

Bettencourt, L. M., Lobo, J., Helbing, D., Kühnert, C., & West, G. B. (2007). Growth, innovation, scaling, and the pace of life in cities. Proceedings of the National Academy of Sciences of the United States of America, 104(17), 7301-7306. PMID: 17438298

West, G. B., Brown, J. H., & Enquist, B. J. (1997). A general model for the origin of allometric scaling laws in biology. Science, 276(5309), 122-126. DOI: 10.1126/science.276.5309.122

Chater, N., & Christiansen, M. H. (2010). Language acquisition meets language evolution. Cognitive Science, 34(7), 1131-1157. PMID: 21564247

—

Edit: My sincere apologies to Prof. Hubler for originally spelling his name as ‘Hulber’.
