Note: Late on the evening of 7.20.15: I’ve edited the post at the end of the second section by introducing a distinction between prediction and explanation.

Thinking things over, here’s the core of my objection to talk of free-floating rationales: they’re redundant.

What authorizes talk of “free-floating rationales” (FFRs) is a certain state of affairs, a certain pattern. Does postulating the existence of FFRs add anything to the pattern? Does it make anything more predictable? No. Even when we consider the larger evolutionary context, talk of FFRs adds nothing (p. 351 in [1]):

But who appreciated this power, who recognized this rationale, if not the bird or its individual ancestors? Who else but Mother Nature herself? That is to say: nobody. Evolution by natural selection “chose” this design for this “reason.”

Surely what Mother Nature recognized was the pattern. For all practical purposes talk of FFRs is simply an elaborate name for the pattern. Once the pattern’s been spotted, there is nothing more.

But how’d a biologist spot the pattern? (S)he made observations and thought about them. So I want to switch gears and think about the operation of our conceptual equipment. These considerations have no direct bearing on our argument about Dennett’s evolutionary thought, as every idea we have must be embodied in some computational substrate, the good ideas and the bad. But the indirect implications are worth thinking about. For they indicate that a new intellectual game is afoot.

Dennett on How We Think

Let’s start with a passage from the intentional systems article. This is where Dennett is imagining a soliloquy that our low-nesting bird might have. He doesn’t, of course, want us to think that the bird ever thought such thoughts (or even, for that matter, perhaps thought any thoughts at all). Rather, Dennett is following Dawkins in proposing this as a way for biologists to spot interesting patterns in the life world. Here’s the passage (p. 350 in [1]):

I’m a low-nesting bird, whose chicks are not protectable against a predator who discovers them. This approaching predator can be expected soon to discover them unless I distract it; it could be distracted by its desire to catch and eat me, but only if it thought there was a reasonable chance of its actually catching me (it’s no dummy); it would contract just that belief if I gave it evidence that I couldn’t fly anymore; I could do that by feigning a broken wing, etc.

Keeping that in mind, let’s look at another passage. This is from a 1998 interview [2]:

The only thing that’s novel about my way of doing it is that I’m showing how the very things the other side holds dear – minds, selves, intentions – have an unproblematic but not reduced place in the material world. If you can begin to see what, to take a deliberately extreme example, your thermostat and your mind have in common, and that there’s a perspective from which they seem to be instances of an intentional system, then you can see that the whole process of natural selection is also an intentional system.

It turns out to be no accident that biologists find it so appealing to talk about what Mother Nature has in mind. Everybody in AI, everybody in software, talks that way. “The trouble with this operating system is it doesn’t realize this, or it thinks it has an extra disk drive.” That way of talking is ubiquitous, unselfconscious – and useful. If the thought police came along and tried to force computer scientists and biologists not to use that language, because it was too fanciful, they would run into fierce resistance.

What I do is just say, Well, let’s take that way of talking seriously. Then what happens is that instead of having a Cartesian position that puts minds up there with the spirits and gods, you bring the mind right back into the world. It’s a way of looking at certain material things. It has a great unifying effect.

So, this soliloquy frame of mind is useful in thinking about the biological world, and something very like it is common among those who have to work with software. Dennett’s asking us to believe that, because thinking about these things in that way is so very useful (in predicting what they’re going to do), we might as well conclude that, in some special technical sense, they really ARE like that. That special technical sense is given in his account of the intentional stance as a pattern, which we examined in the previous post [3].

What I want to do is refrain from taking that last step. I agree with Dennett that, yes, this IS a very useful way of thinking about lots of things. But I want to take that insight in a different direction. I want to suggest that what is going on in these cases is that we’re using neuro-computational equipment that evolved for regulating interpersonal interactions and putting it to other uses. Mark Changizi would say we’re harnessing it to those other purposes while Stanislas Dehaene would talk of reuse. I’m happy either way.

Method of Loci and Beyond

Let’s start with a more tractable example. One very useful neuro-computational system is the navigation system, which obviously is quite ancient. Every animal has to move about in the world and so needs computational means to guide that movement. The animal’s life depends on this machinery, so it must be robust.

Now consider an ancient memory technique known as the method of loci (described in [4]):

Choose some fairly elaborate building, a temple was usually suggested, and walk through it several times along a set path, memorizing what you see at various fixed points on the path. These points are the loci which are the key to the method. Once you have this path firmly in mind so that you can call it up at will, you are ready to use it as a memory aid. If, for example, you want to deliver a speech from memory, you conduct an imaginary walk through your temple. At the first locus you create a vivid image which is related to the first point in your speech and then you “store” that image at the locus. You repeat the process for each successive point in the speech until all of the points have been stored away in the loci on the path through the temple. Then, when you give your speech you simply start off on the imaginary path, retrieving your ideas from each locus in turn. The technique could also be used for memorizing a speech word-for-word. In this case, instead of storing ideas at loci, one stored individual words.

Now consider some comments by Irving Geis, an artist who created images of complex biomolecules (continuing in [4]):

In a personal interview, Geis indicated that, in studying a molecule’s structure, he uses an exercise derived from his training as an architect. Instead of taking an imaginary walk through a building, the architectural exercise, he takes an imaginary walk through the molecule. This allows him to visualize the molecule from many points of view and to develop a kinesthetic sense, in addition to a visual sense, of the molecule’s structure. Geis finds this kinesthetic sense so important that he has entertained the idea of building a huge model of a molecule, one large enough that people could enter it and move around, thereby gaining insight into its structure. Geis has pointed out that biochemists, as well as illustrators, must do this kind of thinking. To understand a molecule’s functional structure biochemists will imagine various sight lines through the image they are examining. If they have a three-dimensional image on a CRT, they can direct the computer to display the molecule from various orientations. It is not enough to understand the molecule’s shape from one point of view. In order intuitively to understand its three-dimensional shape one must be able to visualize the molecule from several points of view.

Continuing on:

There is a strong general resemblance between Geis’s molecular walk and the method of loci. In both cases we have not only visual space, but motoric space as well. The rhetorician takes an imaginary walk through an imaginary temple; Geis treats the molecule as though it were an environment and imagines how it would be to walk through that environment. The strong resemblance between these two examples suggests that they call upon the same basic cognitive capacity.

Ulric Neisser [5], in his discussion of the method of loci, suggests that it is derived from the schemas we have for navigating in the world. The rhetorician uses these navigational schemas as a tool for indexing the points in a speech; Geis uses them to explore the complex structure of large molecules. To use an analogy from computer science, it is as though Geis took a program for navigation and applied it to data derived, not from the immediate physical environment, but from molecular biology. Similarly, the rhetorician applies the program to the points of a speech he or she wishes to memorize. The program is the same in all three cases. Only the data on which the program operates is different.
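The computer-science analogy in that passage can be made concrete with a minimal Python sketch. It illustrates only the analogy (one routine, different data) and is not a model of any cognitive mechanism; the function `walk`, the paths, and all of the data are hypothetical, invented here for illustration.

```python
from typing import Dict, List


def walk(path: List[str], contents: Dict[str, str]) -> List[str]:
    """Follow a fixed path and report what is stored at each locus, in order."""
    return [f"{locus}: {contents[locus]}" for locus in path if locus in contents]


# One route through a hypothetical temple, reused for two kinds of data.
temple_path = ["entrance", "altar", "colonnade"]

# Data from the immediate physical environment (hypothetical).
surroundings = {
    "entrance": "bronze doors",
    "altar": "marble slab",
    "colonnade": "twelve pillars",
}

# Data from a speech: each point is "stored" at a locus (hypothetical).
speech = {
    "entrance": "greet the audience",
    "altar": "state the thesis",
    "colonnade": "close with an appeal",
}

# Data from a molecule, walked as if it were a building (hypothetical labels).
molecule_path = ["binding site", "helix", "loop"]
molecule = {
    "binding site": "pocket on the surface",
    "helix": "runs through the core",
    "loop": "flexible and exposed",
}

# The same routine runs unchanged in all three cases; only the data differ.
for path, data in [
    (temple_path, surroundings),
    (temple_path, speech),
    (molecule_path, molecule),
]:
    print(walk(path, data))
```

Nothing in `walk` knows whether it is traversing a building, a speech, or a molecule, which is the point of the analogy.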

I do not know whether anything has been done to prove out Neisser’s suggestion, which dates back to 1976. But I find it plausible on the face of it. What I’m suggesting is that something like that is going on when Dawkins provides adaptive soliloquies or when computer scientists and software engineers craft mentalist scenarios for computers.

And there is some experimental evidence for the reuse or harnessing of social cognition for other purposes. I’m thinking of the well-known paper by Laurence Fiddick, Leda Cosmides, and John Tooby, “No interpretation without representation: The role of domain-specific representations and inferences in the Wason selection task” [6]. The work is complicated and so I’m not going to attempt a summary. Suffice it to say, what the research shows is that a problem that many people find difficult to solve when it is presented as one of context-free logical inference becomes tractable when it is presented in the form of relationships between people. Our neuro-computational machinery for social cognition has facilities that can be used to solve other kinds of problems, provided those problems are presented in a way that fits the recognition requirements of this computational space.
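For readers who have not met the Wason selection task, here is a minimal Python sketch of its logic. The two card sets are the familiar textbook versions (vowels and even numbers; beer and age), assumed here for illustration and not necessarily the materials used in [6]; the function name, the predicates, and the age threshold of 18 are likewise assumptions. Given a rule of the form “if P then Q,” the only cards that can falsify it are those showing P and those showing not-Q.

```python
from typing import Callable, List


def cards_to_turn(
    cards: List[str],
    shows_p: Callable[[str], bool],
    shows_not_q: Callable[[str], bool],
) -> List[str]:
    """Return the cards that could falsify 'if P then Q': P cards and not-Q cards."""
    return [card for card in cards if shows_p(card) or shows_not_q(card)]


# Abstract version: "If a card has a vowel on one side, it has an even number on the other."
abstract_cards = ["A", "K", "4", "7"]
abstract_answer = cards_to_turn(
    abstract_cards,
    shows_p=lambda c: c.isalpha() and c in "AEIOU",        # visible face is a vowel
    shows_not_q=lambda c: c.isdigit() and int(c) % 2 == 1,  # visible face is an odd number
)

# Social version: "If a person is drinking beer, they must be at least 18."
social_cards = ["beer", "cola", "25", "16"]
social_answer = cards_to_turn(
    social_cards,
    shows_p=lambda c: c == "beer",                      # visible face: drinking beer
    shows_not_q=lambda c: c.isdigit() and int(c) < 18,  # visible face: under age
)

print(abstract_answer)  # ['A', '7']
print(social_answer)    # ['beer', '16']
```

The logical structure of the two versions is identical, which is what makes the reported difference in difficulty interesting.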

In this view, thinking about evolutionary scenarios or computational issues as problems of social interaction or even self-cognition is useful because it taps into powerful mental facilities. Whether or not the phenomena one is thinking about actually are (full-blown) intentional systems is, on this account, irrelevant to that usefulness. Settling that issue requires further argument and investigation.

As we’ve seen in my previous post [3], Dennett provides a further argument in the form of reasoning about patterns. However, near the end of his 1983 intentional systems article [1], he also notes this (p. 388):

Consider, for instance, how Dawkins scrupulously pauses, again and again, in The Selfish Gene (1976) to show precisely what the cash value of his selfishness talk is.

This goes somewhat beyond Dennett’s pattern argument, for it suggests that, given the intentional pattern, we’ve now got to cash it out in other terms. In the case of free-floating rationales, I don’t see what Dennett’s concept gets us. There’s no need to cash it out because, as I’ve said above, it’s simply redundant. An adaptive account gets along fine without mentioning FFRs. Or, if you will, that adaptive account is the cashing-out.

I note, however, that we need to make a distinction between prediction and explanation. Let’s forget the intentional level and consider the purely physical level. It is one thing to be able to predict the motions of the planets. It is something different to explain them. Prediction is a matter of having the appropriate mathematics while explanation requires that we derive the mathematics from underlying physical laws and principles. It wasn’t until Newton that we were able to derive the predictive mathematics from physical principle.
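To make the planetary example concrete, here is a sketch for the simplified case of a circular orbit, an assumption made only for brevity. The symbols are introduced here and do not appear in the original: $G$ is the gravitational constant, $M$ the mass of the sun, $m$ the mass of the planet, $r$ the orbital radius, $\omega$ the angular velocity, and $T$ the orbital period. Kepler’s third law, $T^{2} \propto r^{3}$, is the predictive mathematics; setting Newtonian gravity equal to the centripetal force derives it from physical principle:

$$
\frac{G M m}{r^{2}} = m\,\omega^{2} r, \qquad \omega = \frac{2\pi}{T}
\quad\Longrightarrow\quad
T^{2} = \frac{4\pi^{2}}{G M}\, r^{3}.
$$

The derivation also fixes the constant of proportionality, which the predictive law alone leaves as a number to be measured.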

The biological world is, of course, different from the inanimate physical world. But I suggest that the distinction between being able to predict and being able to explain remains valid. It is one thing to predict accurately that a mother of helpless chicks will act wounded in the presence of a predator that is too close to the nest. To explain that, we need to work out the behavioral and evolutionary costs and benefits of various behaviors among the actors in this scenario and assure ourselves – in part through recourse to evolutionary game theory – that the observed behaviors are plausible given known mechanisms.

This brings us to Dennett’s conception of memes as active cultural agents. I’m not even sure what it is that Dennett claims to predict in various cases, but beyond that, as far as I can tell, he has simply given up on explaining active memes in any but analogical terms (computer viruses or apps, which I’ve addressed elsewhere [7, 8]). I want to end by suggesting that one reason Dennett has given up on cashing out his meme-idea is that, for all his insistence on the mind as computer, he doesn’t have a very rich or robust concept of the computational (aspect of) mind.

Dennett can’t grasp the mind, he can only point at it

Though I’ve certainly not read all that Dennett has written on the computational mind, I’ve not seen him make use of actual computational models in the work I’ve read. He doesn’t actually work with the kinds of models proposed in AI or cognitive science. I think that leaves him with very thin computational intuitions.

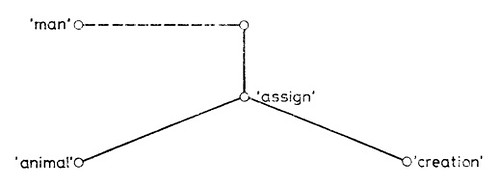

What do I mean? When I first met David Hays he had just finished an article he which he used a knowledge representation formalism to analyze various concepts of alienation [9]. Here’s one diagram from that article.

It’s from a series of diagrams he used to develop a representation of Marx’s concept of alienation. In such diagrams, known as semantic or cognitive networks, the nodes represent concepts while the edges (that is, the lines between the nodes) represent relations between those concepts. While that’s a simple diagram, it’s only one of about a half-dozen Hays used to develop Marx’s concept. Taken together, they’re somewhat more complex, and even then, they assumed a mass of other linked concepts.
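As a rough idea of what working with such a structure involves, here is a minimal Python sketch of a network of labeled nodes and edges. It is a generic toy, not Hays’s formalism: the class `SemanticNetwork`, its methods, the relation names, and the robin/bird example are all assumptions chosen for illustration, and none of the content is taken from his diagrams.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class SemanticNetwork:
    """Concepts are nodes; each edge is a labeled relation between two concepts."""

    def __init__(self) -> None:
        self.edges: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, source: str, relation: str, target: str) -> None:
        """Add a directed, labeled edge from source to target."""
        self.edges[source].append((relation, target))

    def related(self, source: str, relation: str) -> List[str]:
        """Targets reached from source by a single edge with this label."""
        return [t for r, t in self.edges.get(source, []) if r == relation]

    def inherited(self, source: str, relation: str) -> List[str]:
        """Targets of `relation` on source and on everything source is-a, transitively."""
        found: List[str] = []
        seen = set()
        stack = [source]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            found.extend(self.related(node, relation))
            stack.extend(self.related(node, "isa"))
        return found


# Toy content, invented for illustration only.
net = SemanticNetwork()
net.add("robin", "isa", "bird")
net.add("bird", "isa", "animal")
net.add("bird", "has", "wings")
net.add("animal", "has", "skin")

print(net.related("robin", "has"))    # []
print(net.inherited("robin", "has"))  # ['wings', 'skin']
```

Even in a toy like this, what a concept “has” depends on the other concepts it is linked to, which hints at why a single diagram presupposes a mass of others.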

I don’t know exactly what cognitive science Dennett was reading back in the 1970s and 80s, but I think it’s safe to assume that he saw such diagrams as they were quite common back then, the days of “good old fashioned artificial intelligence” (GOFAI) when mental representations were carefully hand-coded. But it’s one thing to read such diagrams and even to study them with some care. It’s something else to work with them. Doing that requires a period of training. I have no reason to believe that Dennett undertook that step. If not, then he lacks intuitions; intuitions that might well have changed how he thinks about the mind. It’s one thing to believe, to really and deeply believe, that those cognitive science folks know what they’re doing when they construct such things – after all, they do run in simulation programs, don’t they? It’s something else entirely to be able to construct such things yourself.

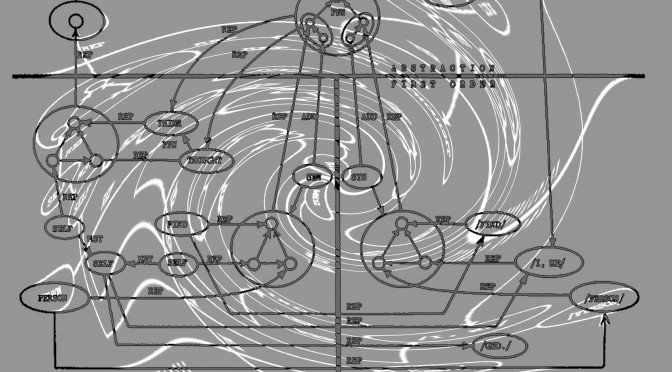

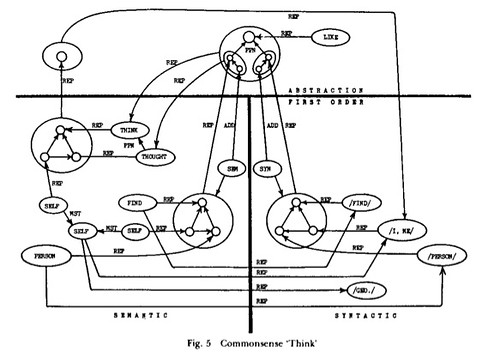

Now look at this rather more complex diagram from an article I wrote about a Shakespeare sonnet [10]:

Like Hays’s diagram, it’s one in a series and you need the whole pile of them to do the intended job, in this case to provide a partial semantic foundation for a sonnet. This diagram represents (my cognitive account of) the folk psychological notion of thinking, which I interpret as meaning something like inner speech. I chose it for this post because thinking, like believing and desiring, is one of those things for which Dennett invented the intentional stance. If you look over there at the lower left you’ll see a node I’ve labeled “self.” By that I did not mean the entirety of what we sometimes call THE SELF, but only that simple cognitive entity to which one’s name is attached. As for THE SELF, think of it as a pearl and that humble node as the grain of sand around which the oyster secretes the pearl. It’s not the whole of THE SELF, but only a starting point.

Why would you mount an intentional argument when such diagrams are what have seized your attention? What would Dennett’s intentional stance tell you? Nothing, I warrant, nothing. Of course, your construction might well be completely wrong – some years later I took a more sophisticated approach to the self [11] – but you can take another approach.

When the game has moved on to the construction of such diagrams as representations of mental apparatus, it is not at all clear to me what work there is to be done by the traditional equipment of analytic philosophy. It’s not that I think that such diagrams provide definitive answers to questions about the self, intentionality, beliefs and desires, and so forth. Rather, they exist in a different universe of discourse, one with different problems and challenges.

That other universe, I submit, is where the game is today or, if not quite there, that’s where it’s headed. In this universe one constructs explicit models of conceptual equipment. One constructs explicit models of our navigational system or our social cognition system. And one can readily conceive of the mind itself as an environment to which concepts must adapt if they are to be taken up, used, and therefore circulated.

References

[1] Daniel Dennett. Intentional systems in cognitive ethology: The “Panglossian paradigm” defended. Behavioral and Brain Sciences 6, 1983: 343-390. URL: https://www.academia.edu/7762681/Beyond_Quantification_Digital_Criticism_and_the_Search_for_Patterns

[2] Harvey Blume. A Conversation with Daniel Dennett. Digital Culture, The Atlantic Magazine, 9 December 1998. URL: http://www.theatlantic.com/past/docs/unbound/digicult/dennett.htm

[3] Bill Benzon. Dan Dennett on Patterns (and Ontology). New Savanna (blog), July 20, 2015. URL: http://new-savanna.blogspot.com/2015/07/dan-dennett-on-patterns-and-ontology.html

[4] William Benzon. Visual Thinking. In Allen Kent and James G. Williams, Eds. Encyclopedia of Computer Science and Technology. Volume 23, Supplement 8. New York; Basel: Marcel Dekker, Inc. (1990) 411-427. URL: https://www.academia.edu/13450375/Visual_Thinking

[5] Ulric Neisser. Cognition and Reality. San Francisco: W. H. Freeman, 1976.

[6] Laurence Fiddick, Leda Cosmides, John Tooby. No interpretation without representation: The role of domain-specific representations and inferences in the Wason selection task. Cognition 77 (2000) 1-79. URL: http://www.cep.ucsb.edu/papers/socexrelevance2000.pdf

[7] Bill Benzon. Cultural Evolution, Memes, and the Trouble with Dan Dennett. Working Paper, 2013: pp. 16-20, 49-50 URL: https://www.academia.edu/4204175/Cultural_Evolution_Memes_and_the_Trouble_with_Dan_Dennett

[8] Bill Benzon. Dennet’s WRONG: the Mind is NOT Software for the Brain. New Savanna (blog), July 20, 2015. URL: http://new-savanna.blogspot.com/2015/05/dennets-wrong-mind-is-not-software-for.html

[9] David Hays. On “Alienation”: An Essay in the Psycholinguistics of Science. In (R.R. Geyer & D. R. Schietzer, Eds.): Theories of Alienation. Leiden: Martinus Nijhoff, (1976) pp. 169-187. URL: https://www.academia.edu/9203457/On_Alienation_An_Essay_in_the_Psycholinguistics_of_Science

[10] William Benzon. Cognitive Networks and Literary Semantics. MLN 91: 952-982, 1976. URL: https://www.academia.edu/9203457/On_Alienation_An_Essay_in_the_Psycholinguistics_of_Science

[11] William Benzon. First Person: Neuro-Cognitive Notes on the Self in Life and in Fiction. PsyArt: A Hyperlink Journal for Psychological Study of the Arts, August 21, 2000. URL: https://www.academia.edu/8331456/First_Person_Neuro-Cognitive_Notes_on_the_Self_in_Life_and_in_Fiction