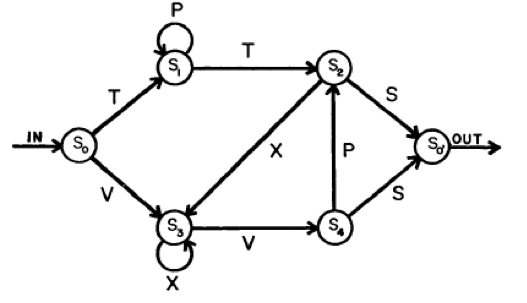

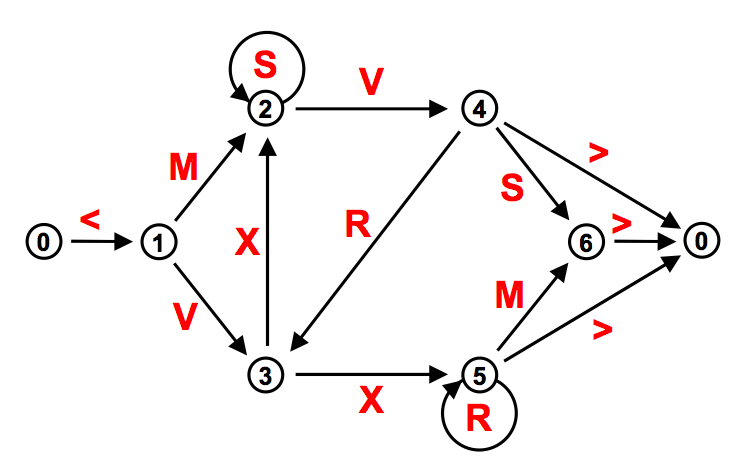

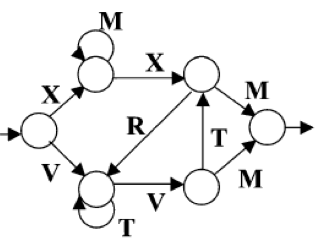

Recently, I’ve been attending an artificial language learning research group and have discovered an interesting case of cultural inheritance. Arthur Reber was one of the first researchers to look at the implicit learning of grammar. Way back in 1967, he studied how adults (quaintly called ‘Ss’ in the original paper) learned an artificial grammar generated by a finite-state automaton. Here is the granddaddy of artificial language learning automata:

It’s simple enough to understand when you see it, but complex enough that inferring it from a set of strings is fairly difficult. The experiment was seminal and has a big following (Google clocks almost 1,000 citations of the 1967 paper alone). In fact, other studies of implicit grammar learning were keen to replicate it. As a result, a lineage of experiments inherited its stimuli from earlier research. This is understandable, of course. When designing experiments, mistakes are costly, so it’s always a good idea to use things that have worked before.
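To make the "easy to read, hard to infer" point concrete, here is a minimal Python sketch of how a finite-state grammar produces strings, and how a string can be checked against it. The transition table is an assumption: it follows the five-letter (T, P, S, X, V) version often reproduced as the "Reber grammar" in later work, and may not match the diagram above node for node. The names `REBER_LIKE`, `generate` and `accepts` are hypothetical.

```python
import random

# Assumed transition table, modelled on the version often reproduced as the
# "Reber grammar" in later work; it may differ in detail from the 1967 figure.
# Each state maps to its outgoing arcs as (symbol, next_state); None = exit.
REBER_LIKE = {
    0: [("T", 1), ("P", 2)],
    1: [("S", 1), ("X", 3)],  # S is a self-loop
    2: [("T", 2), ("V", 4)],  # T is a self-loop
    3: [("X", 2), ("S", None)],
    4: [("P", 3), ("V", None)],
}


def generate(fsa, rng, start=0):
    """Random walk from `start`, emitting one symbol per arc until the exit."""
    state, out = start, []
    while state is not None:
        symbol, state = rng.choice(fsa[state])
        out.append(symbol)
    return "".join(out)


def accepts(fsa, string, start=0):
    """True if `string` traces a path from `start` that ends at the exit."""
    states = {start}
    for ch in string:
        # Follow every arc labelled `ch`; the exit (None) has no outgoing arcs.
        states = {
            nxt
            for s in states
            if s is not None
            for sym, nxt in fsa[s]
            if sym == ch
        }
        if not states:
            return False
    return None in states


if __name__ == "__main__":
    rng = random.Random(1967)
    samples = [generate(REBER_LIKE, rng) for _ in range(5)]
    print(samples)
    print(all(accepts(REBER_LIKE, s) for s in samples))  # True
    print(accepts(REBER_LIKE, "TXS"), accepts(REBER_LIKE, "TXX"))  # True False
```

A participant only ever sees the output of something like `generate`; working backwards from those strings to the table is the hard inverse problem, even though the table itself fits in a few lines.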

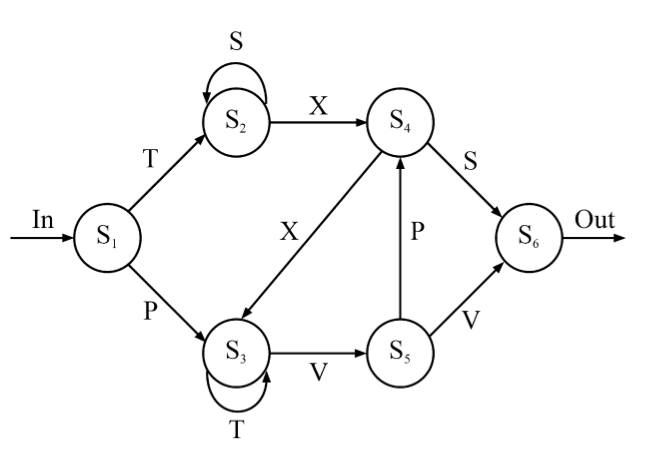

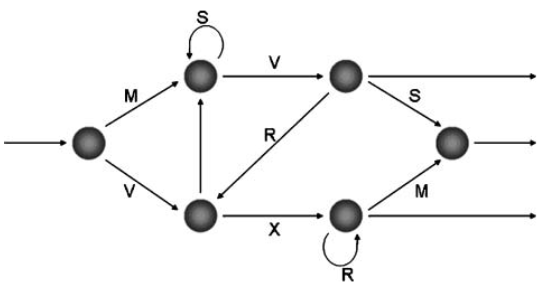

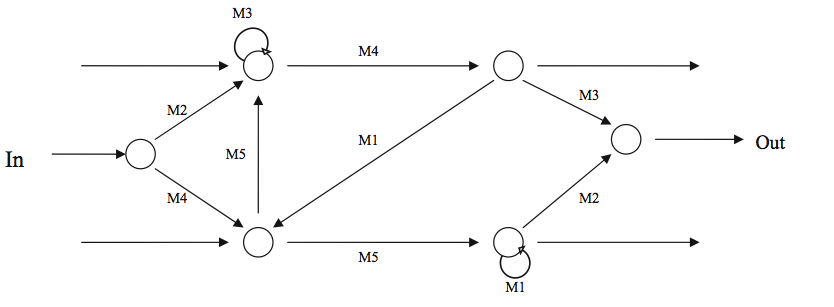

Here are some finite-state grammars I found in other studies of implicit learning. The experiments range from the traditional paradigm to neuroimaging studies, and the grammars produce everything from letters to words, symbols, music and even dance moves.

These are almost exactly the same as Reber’s original. Is science in danger of inbreeding?

Perhaps not: there are some ‘mutations’, for instance the self-loop moving to the second node at the bottom, and the possibility of ending on any of the three rightmost nodes. However, the general qualities remain.

The disturbing thing about all of this is that, as far as I can tell, Reber’s initial finite-state automaton was mostly arbitrary. The diagonal link between S2 and S3 in the original diagram adds some complexity, but has no obvious rationale. What if Reber got lucky? What if you get completely different results using other automata? Having said this, I don’t know much about finite-state automata: how different can six-node graphs actually get?

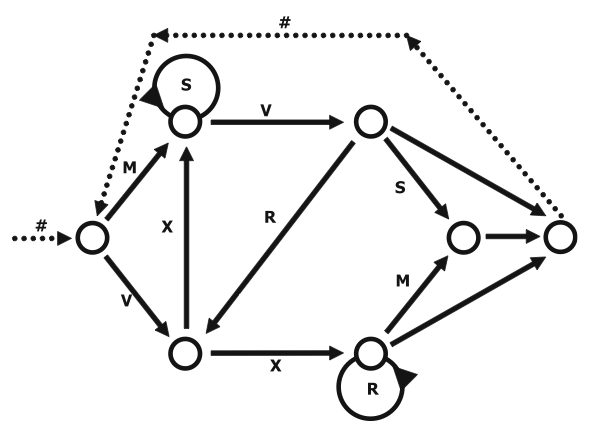

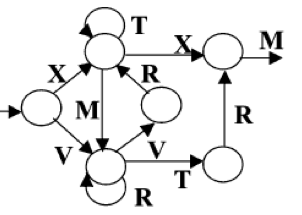

Of course, other automata have been tested. Here’s one from Conway & Christiansen (2006):

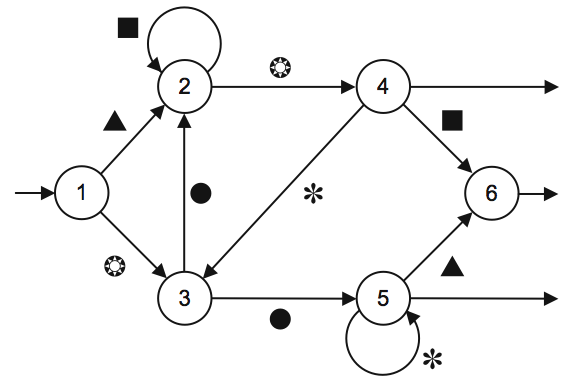

However, they use two grammars, and guess what the second one is? That’s right:

I’m worried because studies may inherit the assumptions of their predecessors, possibly skewing the field. I’m coming up against this problem in my own research. I’m using non-words and, at first, I generated my own using the English Lexicon Project website. However, there were lots of problems, and I ended up using non-words from another study. It seemed like a good idea, but today one of my subjects said that they were taking cues from word length. Uh oh. It seems that there’s a fine balance to be struck between credibility and innovation.

A. Reber (1967). Implicit learning of artificial grammars. Journal of Verbal Learning and Verbal Behavior, 6, 855-863.

There’s a good chapter by DeKeyser in the 2003 Handbook of Second Language Acquisition that goes through the history of how these implicit learning studies have been interpreted. People generally perform better than chance in them, but not perfectly. The big sticking point is whether this is because, at some level, they’ve internalized the FSG, or whether they’ve just picked up on some salient surface features. Possibly it’s a bit of both, but it’s very difficult to separate the two.

Sean:

The grammars were arbitrary, utterly beside the point, which was to examine how individuals pick up patterns and structure while being unaware of both the process and products of learning. The grammars were simply convenient devices for generating lots of structured stimuli.

But thanks for noticing my (early) stuff.

Arthur